Model Coupling IRENE: Difference between revisions

| (7 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

<div class="title">Test Case: Hurricane Irene</div>__NOTOC__ | <div class="title">Test Case: Hurricane Irene</div>__NOTOC__ | ||

<!-- Edit Template: | <!-- Edit Template:Coupling_Examples_TOC to modify this Table of Contents--> | ||

<div style="float: left;margin: 0 20px 0 0;">{{ | <div style="float: left;margin: 0 20px 0 0;">{{ Coupling Examples TOC}}</div>__TOC__ | ||

<div style="clear: both"></div> | <div style="clear: both"></div> | ||

{{warning}} '''Warning:''' This is quite a complex test using several advanced features of '''ROMS'''. It is intended for expert | {{warning}} '''Warning:''' This is quite a complex test using several advanced features of '''ROMS'''. It is intended for expert '''ROMS''' users. If you are a '''ROMS''' beginner, we highly recommend staying away from this test case until you gain substantial expertise with simpler test cases and applications. | ||

==Introduction== | ==Introduction== | ||

Hurricane Irene was | Hurricane Irene is used for testing '''ROMS''' coupling infrastructure with the '''ESMF'''/'''NUOPC''' library. It includes examples for running atmosphere-ocean coupling, weakly coupled atmosphere-ocean data assimilation with 4D-Var only in the '''ROMS''' component, and forward solution with observations verification. Hurricane Irene was the first major hurricane of the 2011 Atlantic hurricane season, as shown below. | ||

The WRF and ROMS | [[Image:2011_Atlantic_hurricane_tracks.png|center|800px]] | ||

[[Image:Hurricane_Irene_track.png|center|800px]] | |||

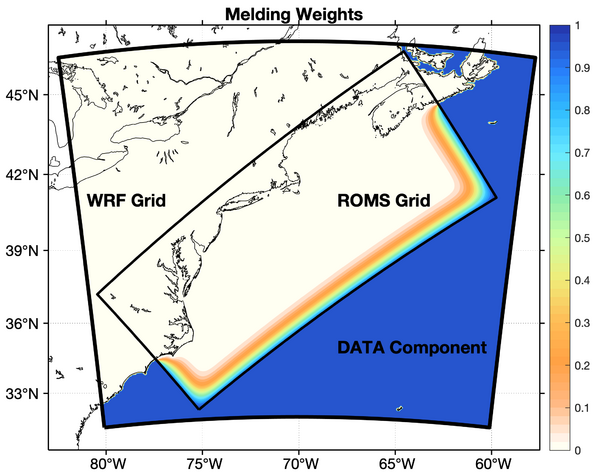

The coupled '''DATA'''-'''WRF'''-'''ROMS''' system runs for only 42 hours as Hurricane Irene approaches the U.S. East Coast. The simulations are started on 2011-08-27 06:00:00 GMT. The '''WRF''' and '''ROMS''' grids are incongruent, as shown below, so the '''DATA''' model provides the SST values to '''WRF''' at locations not covered by the '''ROMS''' grid. In this case, SST is exported from '''ROMS''' and '''DATA''' components to '''WRF''', which imports the regridded values and then melds both SST fields. The '''DATA''' component is usually a NetCDF file containing SST data snapshots from satellite, climatology, or a global model forecast/hindcast like '''HyCOM'''. | |||

==Grid Setup and Melding== | ==Grid Setup and Melding== | ||

The WRF | The '''WRF''' 6 km resolution grid occupies a larger area than the '''ROMS''' ~7 km resolution domain. The '''DATA''' component consists of a NetCDF file containing 3-hour SST snapshots extracted from the '''HyCOM GLBu0.08''' hindcast dataset. The '''NUOPC''' ''cap'' file for '''WRF''' melds the imported, regridded SST using the following equation: | ||

<math display="block">\hbox{SST}_\hbox{ATM}(:,:)=W_\hbox{ROMS}(:,:)*\hbox{SST}_\hbox{ROMS}(:,:)+W_\hbox{DATA}(:,:)*\hbox{SST}_\hbox{DATA}(:,:)</math> | |||

where <math> | where <math>W_\hbox{ROMS}(:,:)+W_\hbox{DATA}(:,:)=1.0</math> are the weight coefficients that guarantee a smooth transition between the '''ROMS''' and '''DATA''' values, as illustrated below. | ||

[[File:melding_weights_IRENE.png|600px]] | [[File:melding_weights_IRENE.png|center|600px]] | ||

These | These weights are computed and plotted using the script '''coupling/wrf_weights.m''' from the '''ROMS''' Matlab repository. | ||

==ROMS Driver== | ==ROMS Driver== | ||

The design of the ROMS coupling interface with the ESMF/NUOPC library allows both driver and component modes of operation. This test case uses the driver mode, meaning that ROMS is the main program that provides all the interfaces and logistics to couple to other '''ESM''' components. In addition, it provides '''NUOPC'''-based generic '''ESM''' component services, interaction between gridded components in terms of '''NUOPC''' cap files, connectors between components for the regridding of source and destination fields (esmf_coupler.h), input scripts, and coupling metadata management. | The design of the '''ROMS''' coupling interface with the '''ESMF/NUOPC''' library allows both driver and component modes of operation. This test case uses the driver mode, meaning that '''ROMS''' is the main program that provides all the interfaces and logistics to couple to other '''ESM''' components. In addition, it provides '''NUOPC'''-based generic '''ESM''' component services, interaction between gridded components in terms of '''NUOPC''' ''cap'' files, connectors between components for the regridding of source and destination fields ('''esmf_coupler.h'''), input scripts, and coupling metadata management. | ||

For more details about '''driver''' and '''component''' modes, check the [[Model_Coupling_ESMF|ESMF wiki page]]. | For more details about '''driver''' and '''component''' modes, check the [[Model_Coupling_ESMF|ESMF wiki page]]. | ||

| Line 36: | Line 37: | ||

==Run Sequence== | ==Run Sequence== | ||

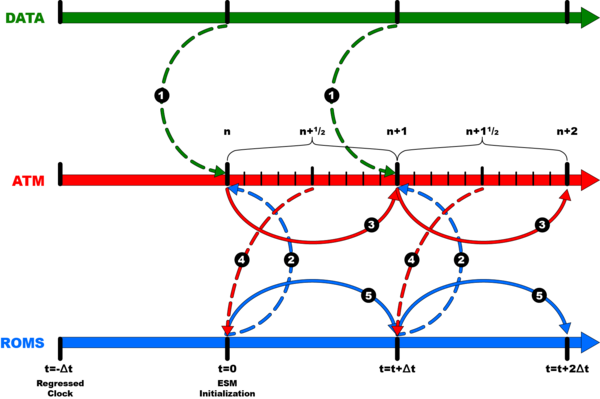

The ESMF RunSequence configuration file sets how the ESM components are connected and coupled. All the components interact with the same or different coupling time step. Usually, the connector from ROMS to ATM is explicit, whereas the connector from ATM to ROMS is semi-implicit. Often, the timestep of the atmosphere kernel is smaller than that for the ocean. Therefore, it is advantageous for the ATM model to export time-averaged fields over the coupling interval, which is the same as the ROMS timestep. It is semi-implicit because ROMS right-hand-side terms are forced with n+1/2 ATM fields because of the time-averaging. The following diagram shows the explicit and semi-implicit coupling for a DATA- | The '''ESMF''' RunSequence configuration file sets how the '''ESM''' components are connected and coupled. All the components interact with the same or different coupling time step. Usually, the connector from '''ROMS''' to '''ATM''' is explicit, whereas the connector from '''ATM''' to '''ROMS''' is semi-implicit. Often, the timestep of the atmosphere kernel is smaller than that for the ocean. Therefore, it is advantageous for the '''ATM''' model to export time-averaged fields over the coupling interval, which is the same as the '''ROMS''' timestep. It is semi-implicit because '''ROMS''' right-hand-side terms are forced with ''n+1/2'' '''ATM''' fields because of the time-averaging. In this application, the '''WRF''' and '''ROMS''' timesteps are 20 and 60 seconds, respectively, for stable solutions due to the strong hurricane winds. The coupling step is 60 seconds (same as '''ROMS'''). The '''WRF''' values are averaged every 60 seconds by activating its '''RAMS''' averaged diagnostics package. | ||

The following diagram shows the explicit and semi-implicit coupling for a '''DATA'''-'''WRF'''-'''ROMS''' system: | |||

{| | {| | ||

| Line 49: | Line 51: | ||

* To download WRF and WPS version 4.3, you may use:<div class="box">> git clone https://github.com/wrf-model/WRF WRF.4.3<br />> cd WRF.4.3<br />> git checkout tags/v4.3<br /><br />> git clone https://github.com/wrf-model/WPS WPS.4.3<br />> cd WPS.4.3<br />> git checkout tags/v4.3</div> | * To download WRF and WPS version 4.3, you may use:<div class="box">> git clone https://github.com/wrf-model/WRF WRF.4.3<br />> cd WRF.4.3<br />> git checkout tags/v4.3<br /><br />> git clone https://github.com/wrf-model/WPS WPS.4.3<br />> cd WPS.4.3<br />> git checkout tags/v4.3</div> | ||

* Configure the build_wrf.csh (or build_wrf.sh) script: | * Configure the <span class="red">build_wrf.csh</span> (or <span class="red">build_wrf.sh</span>) script: | ||

** set the '''ROMS_SRC_DIR''' to the location of your ROMS source code and '''WRF_ROOT_DIR''' to the location of your WRF source code. | ** set the '''ROMS_SRC_DIR''' to the location of your ROMS source code and '''WRF_ROOT_DIR''' to the location of your WRF source code. | ||

** Make sure '''which_MPI''' and '''FORT''' are set appropriately. | ** Make sure '''which_MPI''' and '''FORT''' are set appropriately. | ||

''' | |||

* We want to have the compiled WRF objects outside the WRF source code directory to make incorporating WRF into the coupled system easier. To do this, we use the build_wrf.csh (or build_wrf.sh) '''-move''' option. For example:<div class="box">> build_wrf.csh -j 10 -move</div> | * We want to have the compiled '''WRF''' objects outside the '''WRF''' source code directory to make incorporating '''WRF''' into the coupled system easier. To do this, we use the <span class="red">build_wrf.csh</span> (or <span class="red">build_wrf.sh</span>) '''-move''' option. For example:<div class="box">> build_wrf.csh -j 10 -move</div> | ||

** Choose the appropriate "(dmpar)" option for your compiler. For Example, we choose 15 from the list below to compile with the Intel compilers:<div class="box"> 1. (serial) 2. (smpar) 3. (dmpar) 4. (dm+sm) PGI (pgf90/gcc)<br /> 5. (serial) 6. (smpar) 7. (dmpar) 8. (dm+sm) PGI (pgf90/pgcc): SGI MPT<br /> 9. (serial) 10. (smpar) 11. (dmpar) 12. (dm+sm) PGI (pgf90/gcc): PGI accelerator<br /> 13. (serial) 14. (smpar) 15. (dmpar) 16. (dm+sm) INTEL (ifort/icc)<br /> 17. (dm+sm) INTEL (ifort/icc): Xeon Phi<br /> 18. (serial) 19. (smpar) 20. (dmpar) 21. (dm+sm) INTEL (ifort/icc): Xeon (SNB with AVX mods)<br /> 22. (serial) 23. (smpar) 24. (dmpar) 25. (dm+sm) INTEL (ifort/icc): SGI MPT<br /> 26. (serial) 27. (smpar) 28. (dmpar) 29. (dm+sm) INTEL (ifort/icc): IBM POE<br /> 30. (serial) 31. (dmpar) PATHSCALE (pathf90/pathcc)<br /> 32. (serial) 33. (smpar) 34. (dmpar) 35. (dm+sm) GNU (gfortran/gcc)<br /> 36. (serial) 37. (smpar) 38. (dmpar) 39. (dm+sm) IBM (xlf90_r/cc_r)<br /> 40. (serial) 41. (smpar) 42. (dmpar) 43. (dm+sm) PGI (ftn/gcc): Cray XC CLE<br /> 44. (serial) 45. (smpar) 46. (dmpar) 47. (dm+sm) CRAY CCE (ftn $(NOOMP)/cc): Cray XE and XC<br /> 48. (serial) 49. (smpar) 50. (dmpar) 51. (dm+sm) INTEL (ftn/icc): Cray XC<br /> 52. (serial) 53. (smpar) 54. (dmpar) 55. (dm+sm) PGI (pgf90/pgcc)<br /> 56. (serial) 57. (smpar) 58. (dmpar) 59. (dm+sm) PGI (pgf90/gcc): -f90=pgf90<br /> 60. (serial) 61. (smpar) 62. (dmpar) 63. (dm+sm) PGI (pgf90/pgcc): -f90=pgf90<br /> 64. (serial) 65. (smpar) 66. (dmpar) 67. (dm+sm) INTEL (ifort/icc): HSW/BDW<br /> 68. (serial) 69. (smpar) 70. (dmpar) 71. (dm+sm) INTEL (ifort/icc): KNL MIC</div>{{note}} <span class="red">'''Note:''' If you are using Intel MPI, be sure to set '''which_MPI''' to ''intel'' in your WRF build script.</span> Otherwise, the WRF build system will use the wrong MPI compiler. | ** Choose the appropriate "(dmpar)" option for your compiler. 
For Example, we choose 15 from the list below to compile with the Intel compilers:<div class="box"> 1. (serial) 2. (smpar) 3. (dmpar) 4. (dm+sm) PGI (pgf90/gcc)<br /> 5. (serial) 6. (smpar) 7. (dmpar) 8. (dm+sm) PGI (pgf90/pgcc): SGI MPT<br /> 9. (serial) 10. (smpar) 11. (dmpar) 12. (dm+sm) PGI (pgf90/gcc): PGI accelerator<br /> 13. (serial) 14. (smpar) 15. (dmpar) 16. (dm+sm) INTEL (ifort/icc)<br /> 17. (dm+sm) INTEL (ifort/icc): Xeon Phi<br /> 18. (serial) 19. (smpar) 20. (dmpar) 21. (dm+sm) INTEL (ifort/icc): Xeon (SNB with AVX mods)<br /> 22. (serial) 23. (smpar) 24. (dmpar) 25. (dm+sm) INTEL (ifort/icc): SGI MPT<br /> 26. (serial) 27. (smpar) 28. (dmpar) 29. (dm+sm) INTEL (ifort/icc): IBM POE<br /> 30. (serial) 31. (dmpar) PATHSCALE (pathf90/pathcc)<br /> 32. (serial) 33. (smpar) 34. (dmpar) 35. (dm+sm) GNU (gfortran/gcc)<br /> 36. (serial) 37. (smpar) 38. (dmpar) 39. (dm+sm) IBM (xlf90_r/cc_r)<br /> 40. (serial) 41. (smpar) 42. (dmpar) 43. (dm+sm) PGI (ftn/gcc): Cray XC CLE<br /> 44. (serial) 45. (smpar) 46. (dmpar) 47. (dm+sm) CRAY CCE (ftn $(NOOMP)/cc): Cray XE and XC<br /> 48. (serial) 49. (smpar) 50. (dmpar) 51. (dm+sm) INTEL (ftn/icc): Cray XC<br /> 52. (serial) 53. (smpar) 54. (dmpar) 55. (dm+sm) PGI (pgf90/pgcc)<br /> 56. (serial) 57. (smpar) 58. (dmpar) 59. (dm+sm) PGI (pgf90/gcc): -f90=pgf90<br /> 60. (serial) 61. (smpar) 62. (dmpar) 63. (dm+sm) PGI (pgf90/pgcc): -f90=pgf90<br /> 64. (serial) 65. (smpar) 66. (dmpar) 67. (dm+sm) INTEL (ifort/icc): HSW/BDW<br /> 68. (serial) 69. (smpar) 70. (dmpar) 71. (dm+sm) INTEL (ifort/icc): KNL MIC</div>{{note}} <span class="red">'''Note:''' If you are using Intel MPI, be sure to set '''which_MPI''' to ''intel'' in your WRF build script.</span> Otherwise, the '''WRF''' build system will likely use the wrong MPI compiler. | ||

* When asked "Compile for nesting?" you will probably want to choose '''1''' for basic. | * When asked "Compile for nesting?" you will probably want to choose '''1''' for basic. | ||

* | * If the build is successful, the '''WRF''' executables will be located in the '''Build_wrf/Bin''' directory. | ||

:{{note}} '''Note:''' It is useful to define an "ltl" alias in your login script (.cshrc or .bashrc) to avoid showing all the links to data files created by the build script and needed to run WRF.<br /><br />BASH:<div class="box">alias ltl='/bin/ls -ltHF | grep -v ^l'</div>CSH/TCSH:<div class="box">alias ltl '/bin/ls -ltHF | grep -v ^l'</div> | :{{note}} '''Note:''' It is useful to define an "''ltl''" alias in your login script ('''.cshrc''' or '''.bashrc''') to avoid showing all the links to data files created by the build script and needed to run '''WRF'''.<br /><br />BASH:<div class="box">alias ltl='/bin/ls -ltHF | grep -v ^l'</div>CSH/TCSH:<div class="box">alias ltl '/bin/ls -ltHF | grep -v ^l'</div> | ||

==Configuring and Compiling ROMS== | ==Configuring and Compiling ROMS== | ||

'''ROMS''' is the driver of the coupling system. In this application the '''WRF''' surface Boundary Layer (SBL) formulation is used to compute the atmospheric fluxes. Therefore, bulk_flux = 0 in either <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>. | '''ROMS''' is the driver of the coupling system. In this application the '''WRF''' surface Boundary Layer (SBL) formulation is used to compute the atmospheric fluxes. Therefore, bulk_flux = 0 in either <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>. | ||

Notice that bulk_flux = 1 activates ROMS CPP options: [[Options#BULK_FLUXES|BULK_FLUXES]], [[Options#COOL_SKIN|COOL_SKIN]], [[Options#WIND_MINUS_CURRENT|WIND_MINUS_CURRENT]], [[Options#EMINUSP|EMINUSP]], and [[Options#LONGWAVE_OUT|LONGWAVE_OUT]]. The option bulk_flux = 1 in the ROMS build script '''IS NOT RECOMMENDED FOR THIS APPLICATION''' because the [[bulk_flux.F]] module is not tuned for Hurricane regimes, and will get the wrong solution. | Notice that bulk_flux = 1 activates ROMS CPP options: [[Options#BULK_FLUXES|BULK_FLUXES]], [[Options#COOL_SKIN|COOL_SKIN]], [[Options#WIND_MINUS_CURRENT|WIND_MINUS_CURRENT]], [[Options#EMINUSP|EMINUSP]], and [[Options#LONGWAVE_OUT|LONGWAVE_OUT]]. The option bulk_flux = 1 in the '''ROMS''' build script '''<span class="red">IS NOT RECOMMENDED FOR THIS APPLICATION</span>''' because the [[bulk_flux.F]] module is not tuned for Hurricane regimes, and will get the wrong solution. | ||

{{note}} '''Note:''' The following important CPP options are activated in <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>: | {{note}} '''Note:''' The following important CPP options are activated in <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>: | ||

| Line 76: | Line 78: | ||

* Correctly set '''MY_ROMS_SRC''', '''COMPILERS''' (if necessary), '''which_MPI''', and '''FORT''' in <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>. | * Correctly set '''MY_ROMS_SRC''', '''COMPILERS''' (if necessary), '''which_MPI''', and '''FORT''' in <span class="red">build_roms.csh</span> or <span class="red">build_roms.sh</span>. | ||

* Compile ROMS with:<div class="box">> build_roms.csh -j 10</div> | * Compile '''ROMS''' with:<div class="box">> build_roms.csh -j 10</div> | ||

* Customize <span class="red">submit.sh</span> for use on your system. | * Customize <span class="red">submit.sh</span> for use on your system. | ||

| Line 87: | Line 89: | ||

* Standard output files:<div class="box">log.coupler coupler information<br />log.esmf ESMF/NUOPC information<br />log.roms ROMS standard output<br />log.wrf WRF standard error/output<br />namelist.output WRF configuration parameters</div> | * Standard output files:<div class="box">log.coupler coupler information<br />log.esmf ESMF/NUOPC information<br />log.roms ROMS standard output<br />log.wrf WRF standard error/output<br />namelist.output WRF configuration parameters</div> | ||

* ROMS NetCDF files:<div class="box">irene_avg.nc 6-hour averages<br />irene_his.nc hourly history<br />irene_mod_20110827.nc model at observation locations<br />irene_qck.nc hourly surface fields quick save<br />irene_rst.nc restart</div> | * '''ROMS''' NetCDF files:<div class="box">irene_avg.nc 6-hour averages<br />irene_his.nc hourly history<br />irene_mod_20110827.nc model at observation locations<br />irene_qck.nc hourly surface fields quick save<br />irene_rst.nc restart</div> | ||

* WRF NetCDF files:<div class="box">irene_wrf_his_d01_2011-08-27_06_00_00.nc hourly history</div> | * '''WRF''' NetCDF files:<div class="box">irene_wrf_his_d01_2011-08-27_06_00_00.nc hourly history</div> | ||

==Results== | |||

{|align=center width=1000 | |||

|[[Image:wrf_2m_temp_surf_pres.png|1000px]] | |||

|- | |||

|The panel on the left is the '''WRF''' potential temperature at 2m and the right is the surface pressure (mb) for 2011-08-27 17:00:00 after Hurricane Irene makes landfall on the Outer Banks of North Carolina. | |||

|} | |||

Latest revision as of 18:26, 1 March 2022

Warning: This is quite a complex test using several advanced features of ROMS. It is intended for expert ROMS users. If you are a ROMS beginner, we highly recommend staying away from this test case until you gain substantial expertise with simpler test cases and applications.

Introduction

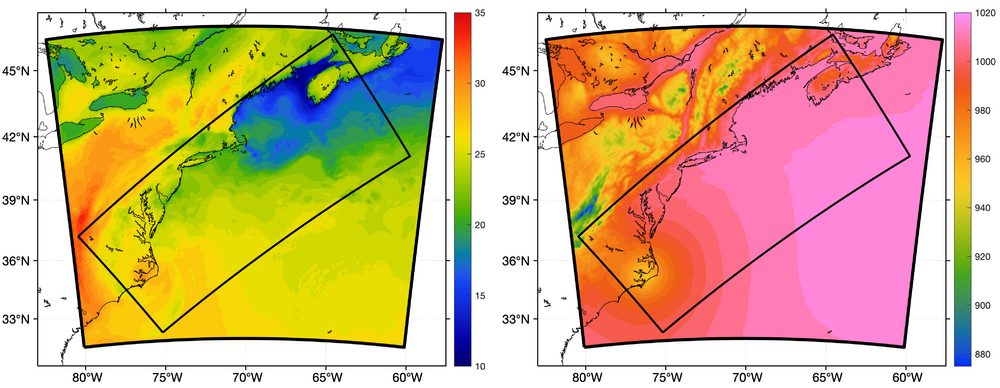

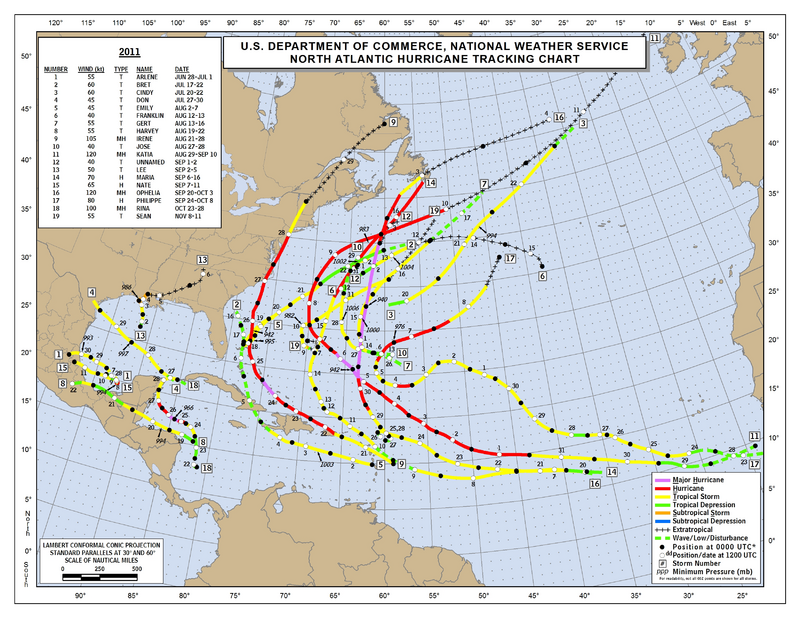

Hurricane Irene is used for testing the ROMS coupling infrastructure with the ESMF/NUOPC library. It includes examples for running atmosphere-ocean coupling, weakly coupled atmosphere-ocean data assimilation with 4D-Var only in the ROMS component, and forward solution with observations verification. Hurricane Irene was the first major hurricane of the 2011 Atlantic hurricane season, as shown below.

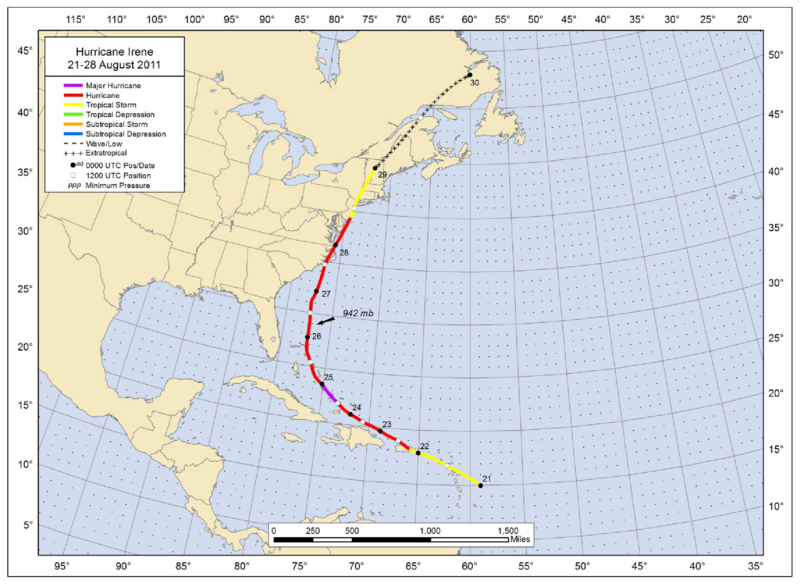

The coupled DATA-WRF-ROMS system runs for only 42 hours as Hurricane Irene approaches the U.S. East Coast. The simulations are started on 2011-08-27 06:00:00 GMT. The WRF and ROMS grids are incongruent, as shown below, so the DATA model provides the SST values to WRF at locations not covered by the ROMS grid. In this case, SST is exported from ROMS and DATA components to WRF, which imports the regridded values and then melds both SST fields. The DATA component is usually a NetCDF file containing SST data snapshots from satellite, climatology, or a global model forecast/hindcast like HyCOM.

Grid Setup and Melding

The WRF 6 km resolution grid occupies a larger area than the ROMS ~7 km resolution domain. The DATA component consists of a NetCDF file containing 3-hour SST snapshots extracted from the HyCOM GLBu0.08 hindcast dataset. The NUOPC cap file for WRF melds the imported, regridded SST using the following equation:

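<math display="block">\hbox{SST}_\hbox{ATM}(:,:)=W_\hbox{ROMS}(:,:)*\hbox{SST}_\hbox{ROMS}(:,:)+W_\hbox{DATA}(:,:)*\hbox{SST}_\hbox{DATA}(:,:)</math>

where the weight coefficients satisfy <math>W_\hbox{ROMS}(:,:)+W_\hbox{DATA}(:,:)=1.0</math> and guarantee a smooth transition between the ROMS and DATA values, as illustrated below.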

These weights are computed and plotted using the script coupling/wrf_weights.m from the ROMS Matlab repository.
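As a minimal sketch of the melding equation above, the following Python snippet blends two SST fields along a one-dimensional transect. The linear ramp, the function names, and the sample temperatures are assumptions made for illustration only; the actual weights are two-dimensional and come from coupling/wrf_weights.m.

```python
def ramp_weights(n_points, ramp_start, ramp_end):
    """Hypothetical ROMS weights along a transect: 1 inside the ROMS grid,
    0 outside it, with a linear ramp between ramp_start and ramp_end."""
    weights = []
    for i in range(n_points):
        if i <= ramp_start:
            w = 1.0
        elif i >= ramp_end:
            w = 0.0
        else:
            w = (ramp_end - i) / (ramp_end - ramp_start)
        weights.append(w)
    return weights


def meld_sst(sst_roms, sst_data, w_roms):
    """Apply SST_ATM = W_ROMS * SST_ROMS + W_DATA * SST_DATA, using
    W_DATA = 1 - W_ROMS so that the two weights always sum to one."""
    return [w * r + (1.0 - w) * d
            for w, r, d in zip(w_roms, sst_roms, sst_data)]


# Illustrative values only (degrees Celsius), not taken from the test case.
w_roms = ramp_weights(n_points=6, ramp_start=1, ramp_end=5)
sst_roms = [28.0] * 6
sst_data = [26.0] * 6
sst_atm = meld_sst(sst_roms, sst_data, w_roms)
```

Where the ROMS weight is one, the melded value equals the ROMS SST; where it is zero, it equals the DATA SST; in between, the result moves smoothly from one to the other.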

ROMS Driver

The design of the ROMS coupling interface with the ESMF/NUOPC library allows both driver and component modes of operation. This test case uses the driver mode, meaning that ROMS is the main program that provides all the interfaces and logistics to couple to other ESM components. In addition, it provides NUOPC-based generic ESM component services, interaction between gridded components in terms of NUOPC cap files, connectors between components for the regridding of source and destination fields (esmf_coupler.h), input scripts, and coupling metadata management.

For more details about driver and component modes, check the ESMF wiki page.

Run Sequence

The ESMF RunSequence configuration file sets how the ESM components are connected and coupled. Components may exchange fields at the same or at different coupling time steps. Usually, the connector from ROMS to ATM is explicit, whereas the connector from ATM to ROMS is semi-implicit. Often, the timestep of the atmosphere kernel is smaller than that of the ocean. Therefore, it is advantageous for the ATM model to export fields time-averaged over the coupling interval, which is the same as the ROMS timestep. The coupling is semi-implicit because, as a result of the time-averaging, the ROMS right-hand-side terms are forced with ATM fields at n+1/2. In this application, the WRF and ROMS timesteps are 20 and 60 seconds, respectively, chosen to keep the solutions stable under the strong hurricane winds. The coupling step is 60 seconds (same as ROMS). The WRF values are averaged every 60 seconds by activating its RAMS averaged diagnostics package. The following diagram shows the explicit and semi-implicit coupling for a DATA-WRF-ROMS system:

[Diagram: Semi-Implicit, ATM Average]
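The timestep arithmetic described in this section can be sketched in Python as follows. The timesteps and run length are those stated in the text; the function names and the sample values are hypothetical, and the averaging shown here only illustrates the idea, not how the WRF diagnostics package is implemented.

```python
WRF_DT = 20      # WRF timestep in seconds (from the text)
ROMS_DT = 60     # ROMS timestep and coupling interval in seconds (from the text)
RUN_HOURS = 42   # length of the coupled run (from the text)


def steps_per_coupling(atm_dt, coupling_dt):
    """Number of atmosphere steps inside one coupling interval."""
    if coupling_dt % atm_dt != 0:
        raise ValueError("coupling interval must be a multiple of the ATM timestep")
    return coupling_dt // atm_dt


def interval_averages(atm_values, n_sub):
    """Average consecutive groups of n_sub atmosphere values,
    giving one exported value per coupling interval."""
    return [
        sum(atm_values[i:i + n_sub]) / n_sub
        for i in range(0, len(atm_values) - n_sub + 1, n_sub)
    ]


n_sub = steps_per_coupling(WRF_DT, ROMS_DT)   # WRF steps per coupling interval
n_coupling = RUN_HOURS * 3600 // ROMS_DT      # coupling exchanges in the run

# Hypothetical samples of an exported field at six WRF steps
# (two coupling intervals); the values are for illustration only.
samples = [0.25, 0.5, 0.75, 1.0, 1.25, 1.5]
averaged = interval_averages(samples, n_sub)
```

Each averaged value summarizes the three WRF steps inside one 60-second interval, which is why it is interpreted as representing the middle of the ROMS step (n+1/2) rather than its start.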

Downloading and Compiling WRF

- To download WRF and WPS version 4.3, you may use:

> git clone https://github.com/wrf-model/WRF WRF.4.3

> cd WRF.4.3

> git checkout tags/v4.3

> git clone https://github.com/wrf-model/WPS WPS.4.3

> cd WPS.4.3

> git checkout tags/v4.3

- Configure the build_wrf.csh (or build_wrf.sh) script:

  - Set ROMS_SRC_DIR to the location of your ROMS source code and WRF_ROOT_DIR to the location of your WRF source code.

  - Make sure which_MPI and FORT are set appropriately.

- We want to have the compiled WRF objects outside the WRF source code directory to make incorporating WRF into the coupled system easier. To do this, we use the build_wrf.csh (or build_wrf.sh) -move option. For example:

> build_wrf.csh -j 10 -move

  - Choose the appropriate "(dmpar)" option for your compiler. For example, we choose 15 from the list below to compile with the Intel compilers:

1. (serial) 2. (smpar) 3. (dmpar) 4. (dm+sm) PGI (pgf90/gcc)

5. (serial) 6. (smpar) 7. (dmpar) 8. (dm+sm) PGI (pgf90/pgcc): SGI MPT

9. (serial) 10. (smpar) 11. (dmpar) 12. (dm+sm) PGI (pgf90/gcc): PGI accelerator

13. (serial) 14. (smpar) 15. (dmpar) 16. (dm+sm) INTEL (ifort/icc)

17. (dm+sm) INTEL (ifort/icc): Xeon Phi

18. (serial) 19. (smpar) 20. (dmpar) 21. (dm+sm) INTEL (ifort/icc): Xeon (SNB with AVX mods)

22. (serial) 23. (smpar) 24. (dmpar) 25. (dm+sm) INTEL (ifort/icc): SGI MPT

26. (serial) 27. (smpar) 28. (dmpar) 29. (dm+sm) INTEL (ifort/icc): IBM POE

30. (serial) 31. (dmpar) PATHSCALE (pathf90/pathcc)

32. (serial) 33. (smpar) 34. (dmpar) 35. (dm+sm) GNU (gfortran/gcc)

36. (serial) 37. (smpar) 38. (dmpar) 39. (dm+sm) IBM (xlf90_r/cc_r)

40. (serial) 41. (smpar) 42. (dmpar) 43. (dm+sm) PGI (ftn/gcc): Cray XC CLE

44. (serial) 45. (smpar) 46. (dmpar) 47. (dm+sm) CRAY CCE (ftn $(NOOMP)/cc): Cray XE and XC

48. (serial) 49. (smpar) 50. (dmpar) 51. (dm+sm) INTEL (ftn/icc): Cray XC

52. (serial) 53. (smpar) 54. (dmpar) 55. (dm+sm) PGI (pgf90/pgcc)

56. (serial) 57. (smpar) 58. (dmpar) 59. (dm+sm) PGI (pgf90/gcc): -f90=pgf90

60. (serial) 61. (smpar) 62. (dmpar) 63. (dm+sm) PGI (pgf90/pgcc): -f90=pgf90

64. (serial) 65. (smpar) 66. (dmpar) 67. (dm+sm) INTEL (ifort/icc): HSW/BDW

68. (serial) 69. (smpar) 70. (dmpar) 71. (dm+sm) INTEL (ifort/icc): KNL MIC

Note: If you are using Intel MPI, be sure to set which_MPI to intel in your WRF build script. Otherwise, the WRF build system will likely use the wrong MPI compiler.

- When asked "Compile for nesting?" you will probably want to choose 1 for basic.

- If the build is successful, the WRF executables will be located in the Build_wrf/Bin directory.
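The option numbers in the configure menu can change between WRF versions, so it may help to look up the "(dmpar)" number by compiler label instead of memorizing it. The following Python sketch does that for a few menu lines copied from the list above; the helper name `dmpar_option` and the parsing approach are assumptions for illustration and are not part of the WRF or ROMS build scripts.

```python
import re

# A few lines copied from the configure menu listed above.
MENU = """\
 13. (serial)  14. (smpar)  15. (dmpar)  16. (dm+sm)   INTEL (ifort/icc)
 17. (dm+sm)   INTEL (ifort/icc): Xeon Phi
 30. (serial)  31. (dmpar)   PATHSCALE (pathf90/pathcc)
 32. (serial)  33. (smpar)  34. (dmpar)  35. (dm+sm)   GNU (gfortran/gcc)
"""

# One numbered menu entry, such as "15. (dmpar)".
OPTION = re.compile(r"(\d+)\.\s+\((serial|smpar|dmpar|dm\+sm)\)")


def dmpar_option(menu_text, compiler_label):
    """Return the menu number of the (dmpar) entry whose line ends with
    exactly compiler_label, or None if there is no such entry."""
    for line in menu_text.splitlines():
        # What remains after removing the numbered entries is the label.
        label = OPTION.sub("", line).strip()
        if label != compiler_label:
            continue
        for number, kind in OPTION.findall(line):
            if kind == "dmpar":
                return int(number)
    return None


intel = dmpar_option(MENU, "INTEL (ifort/icc)")
gnu = dmpar_option(MENU, "GNU (gfortran/gcc)")
```

For the menu shown in this section, the Intel lookup agrees with the choice of 15 made in the text.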

Note: It is useful to define an "ltl" alias in your login script (.cshrc or .bashrc) that hides the links to data files created by the build script and needed to run WRF.

BASH:

alias ltl='/bin/ls -ltHF | grep -v ^l'

CSH/TCSH:

alias ltl '/bin/ls -ltHF | grep -v ^l'
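For readers who want the same filtered view from a script, here is a rough Python counterpart of the alias: it lists the entries of a directory that are not symbolic links, newest first. The function name `list_non_links` is hypothetical, and the output is only the file names, not the long listing that `ls -l` prints.

```python
import os


def list_non_links(directory="."):
    """Return the names of entries in directory that are not symbolic
    links, sorted by modification time with the newest first."""
    entries = [e for e in os.scandir(directory) if not e.is_symlink()]
    entries.sort(key=lambda e: e.stat().st_mtime, reverse=True)
    return [e.name for e in entries]
```

Calling `list_non_links("Build_wrf/Bin")`, for example, would show the real files in that directory while skipping the linked data files.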

Configuring and Compiling ROMS

ROMS is the driver of the coupling system. In this application, the WRF Surface Boundary Layer (SBL) formulation is used to compute the atmospheric fluxes. Therefore, bulk_flux = 0 is set in either build_roms.csh or build_roms.sh.

Notice that bulk_flux = 1 activates the ROMS CPP options BULK_FLUXES, COOL_SKIN, WIND_MINUS_CURRENT, EMINUSP, and LONGWAVE_OUT. Setting bulk_flux = 1 in the ROMS build script IS NOT RECOMMENDED FOR THIS APPLICATION because the bulk_flux.F module is not tuned for hurricane regimes and will give the wrong solution.

Note: The following important CPP options are activated in build_roms.csh or build_roms.sh:

| CPP option | Description |
|---|---|
| IRENE | ROMS application CPP option |
| DATA_COUPLING | Activates DATA component |
| ESMF_LIB | ESMF/NUOPC coupling library (version 8.0 and up) |
| FRC_COUPLING | Activates surface forcing from coupled system |
| ROMS_STDOUT | ROMS standard output is written into 'log.roms' |
| VERIFICATION | Interpolates ROMS solution at observation points |
| WRF_COUPLING | Activates WRF component (version 4.1 and up) |
| WRF_TIMEAVG | WRF exporting 60 sec time-averaged fields |

- Correctly set MY_ROMS_SRC, COMPILERS (if necessary), which_MPI, and FORT in build_roms.csh or build_roms.sh.

- Compile ROMS with:

> build_roms.csh -j 10

- Customize submit.sh for use on your system.

  - If your system uses a scheduler like SLURM:

> sbatch submit.sh

  - If your system does not have a scheduler and your shell is BASH:

> submit.sh > log 2>&1 &

  - If your system does not have a scheduler and your shell is CSH/TCSH:

> submit.sh >& log &

Output Files

- Standard output files:

log.coupler coupler information

log.esmf ESMF/NUOPC information

log.roms ROMS standard output

log.wrf WRF standard error/output

namelist.output WRF configuration parameters

- ROMS NetCDF files:

irene_avg.nc 6-hour averages

irene_his.nc hourly history

irene_mod_20110827.nc model at observation locations

irene_qck.nc hourly surface fields quick save

irene_rst.nc restart

- WRF NetCDF files:

irene_wrf_his_d01_2011-08-27_06_00_00.nc hourly history
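After a run, a quick way to confirm that it produced everything listed above is to check the run directory for each file. The sketch below uses the file names from this section; the helper name `missing_outputs` and the idea of checking by file name alone are assumptions, and an existing file does not by itself mean the run completed successfully.

```python
from pathlib import Path

# File names as listed above for this test case.
EXPECTED_OUTPUT = [
    "log.coupler",
    "log.esmf",
    "log.roms",
    "log.wrf",
    "namelist.output",
    "irene_avg.nc",
    "irene_his.nc",
    "irene_mod_20110827.nc",
    "irene_qck.nc",
    "irene_rst.nc",
    "irene_wrf_his_d01_2011-08-27_06_00_00.nc",
]


def missing_outputs(run_dir, expected=EXPECTED_OUTPUT):
    """Return the expected file names that are absent from run_dir."""
    run_path = Path(run_dir)
    return [name for name in expected if not (run_path / name).is_file()]
```

An empty list from `missing_outputs(".")` means every expected file is present in the current directory.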