Difference between revisions of "I4DVAR ANA SENSITIVITY"

m (Text replacement - "IS4DVAR" to "I4DVAR") (change visibility) |

|||

| Line 3: | Line 3: | ||

__TOC__ | __TOC__ | ||

==Mathematical Formulation== | ==Mathematical Formulation== | ||

The mathematical development presented here closely parallels that of [[Bibliography#ZhuY_2008a | Zhu and Gelaro (2008)]]. The sensitivity of any functional, <math>\cal J</math>, of the analysis <math>\bf\Psi_a</math> or forecast <math>\bf\Psi_f</math> can be efficiently computed using the adjoint model which yields information about the gradients of <math>{\cal J}({\bf\Psi_a})</math> and <math>{\cal J}({\bf\Psi_f})</math>. We can extent the concept of the adjoint sensitivity to compute the sensitivity of the [[ | The mathematical development presented here closely parallels that of [[Bibliography#ZhuY_2008a | Zhu and Gelaro (2008)]]. The sensitivity of any functional, <math>\cal J</math>, of the analysis <math>\bf\Psi_a</math> or forecast <math>\bf\Psi_f</math> can be efficiently computed using the adjoint model which yields information about the gradients of <math>{\cal J}({\bf\Psi_a})</math> and <math>{\cal J}({\bf\Psi_f})</math>. We can extent the concept of the adjoint sensitivity to compute the sensitivity of the [[I4DVAR]] cost function, <math>J</math>, in (1) and any other function <math>\cal J</math> of the forecast <math>\bf\Psi_f</math> to the observations, <math>\bf y</math>. | ||

In [[ | In [[I4DVAR]], we define a quadratic cost function: | ||

{| class="eqno" | {| class="eqno" | ||

| Line 23: | Line 23: | ||

where <math>\psi_a</math> is referred to as the analysis increment, the desired solution of the incremental data assimilation procedure. | where <math>\psi_a</math> is referred to as the analysis increment, the desired solution of the incremental data assimilation procedure. | ||

The [[ | The [[I4DVAR]] analysis can be written in the more traditional form as <math>{\bf\Psi_a} = {\bf\Psi_b} + {\bf K} {\bf d}</math> ([[Bibliography#DaleyR_1991a | Daley, 1991]]), where <math>\psi_a = {\bf K} {\bf d}</math>, the Kalman gain matrix <math>{\bf K} = {\cal H}^{-1} {\bf G}^T {\bf O}^{-1}</math>, and <math>{\cal H} = {\partial^{2}J}/{\partial\psi^2} = {\bf B}^{-1} {\bf G}^T {\bf O}^{-1} {\bf G}</math> is the Hessian matrix. The entire [[I4DVAR]] procedure is therefore neatly embodied in <math>\bf K</math>. At the cost function minimum, (1) can be written as <math>J = \frac{1}{2} {\bf d}^T ({\bf I} - {\bf K}^T {\bf G}^T) {\bf O}^{-1} {\bf d}</math> which yields the sensitivity of the [[I4DVAR]] cost function to the observations. | ||

In the current [[ | In the current [[I4DVAR]]/[[LANCZOS]] data assimilation algorithm, the cost function minimum of (1) is identified using the Lanczos method [[Bibliography#GolubG_1989a | (Golub and Van Loan, 1989)]], in which case: | ||

{| class="eqno" | {| class="eqno" | ||

| Line 32: | Line 32: | ||

|} | |} | ||

where <math>{\bf Q}_k = ({\bf q}_i)</math> is the matrix of <math>k</math> orthogonal Lanczos vectors, and <math>{\bf T}_k</math> is a known tridiagonal matrix. Each of the <math>k-</math>iterations of [[ | where <math>{\bf Q}_k = ({\bf q}_i)</math> is the matrix of <math>k</math> orthogonal Lanczos vectors, and <math>{\bf T}_k</math> is a known tridiagonal matrix. Each of the <math>k-</math>iterations of [[I4DVAR]] employed in finding the minimum of <math>J</math> yields one column <math>{\bf q}_i</math> of <math>{\bf Q}_k</math>. From (3) we can identify the Kalman gain matrix as <math>{\bf K} = - {\bf Q}_k {\bf T}_{k}^{-1} {\bf Q}_{k}^{T} {\bf G}^T {\bf O}^{-1}</math> in which case <math>{\bf K}^T = - {\bf O}^{-1} {\bf G} {\bf Q}_k {\bf T}_{k}^{-1} {\bf Q}_{k}^{T}</math> represents the adjoint of the entire [[I4DVAR]] system. The action of <math>{\bf K}^T</math> on the vector <math>{\bf G}^T {\bf O}^{-1} {\bf d}</math> in (3) can be readily computed since the Lanczos vectors <math>{\bf q}_i</math> and the matrix <math>{\bf T}_k</math> are available at the end of each [[I4DVAR]] assimilation cycle. | ||

Therefore, the basic observation sensitivity algorithm is as follows: | Therefore, the basic observation sensitivity algorithm is as follows: | ||

| Line 48: | Line 48: | ||

|} | |} | ||

which again can be readily evaluated using the adjoint model denoted by <math>{\bf R}^{T}(t,t_0+\tau)</math> and the adjoint of [[ | which again can be readily evaluated using the adjoint model denoted by <math>{\bf R}^{T}(t,t_0+\tau)</math> and the adjoint of [[I4DVAR]], <math>{\bf K}^{T}</math>. | ||

==Technical Description== | ==Technical Description== | ||

This ROMS driver can be used to evaluate the impact of each observation on the [[ | This ROMS driver can be used to evaluate the impact of each observation on the [[I4DVAR]] data assimilation algorithm by measuring their sensitivity over a specified circulation functional index, <math>{\cal J}</math>. This algorithm is an extension of the adjoint sensitivity driver, [[AD_SENSITIVITY]]. As mentioned above, this algorithm is equivalent to taking the adjoint of the [[I4DVAR]] algorithm. | ||

The following steps are carried out in [[obs_sen_is4dvar.h]]: | The following steps are carried out in [[obs_sen_is4dvar.h]]: | ||

| Line 58: | Line 58: | ||

<ol style="list-style-type:lower-roman"> | <ol style="list-style-type:lower-roman"> | ||

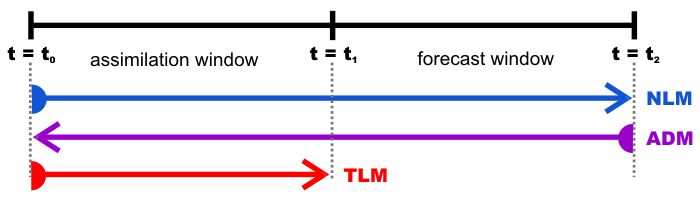

<li>We begin by running the incremental strong constraint 4DVar data assimilation algorithm ([[ | <li>We begin by running the incremental strong constraint 4DVar data assimilation algorithm ([[I4DVAR]]) with the Lanczos conjugate gradient minimization algorithm ([[LANCZOS]]) using <math>k</math> inner-loops (<math>\text{Ninner}=k</math>) and a single outer-loop (<math>\text{Nouter}=1</math>) for the period <math>t=[t_0,t_1]</math>. We will denote by <math>{\bf\Psi_b}(t_0)</math> the background initial condition, and the observations vector by <math>\bf y</math>. The resulting Lanczos vectors that we save in the adjoint NetCDF file will be denoted by <math>{\bf q}_i</math>, where <math>i=1,2,\ldots,k</math>.</li> | ||

<li>Next we run the nonlinear model for the combined assimilation plus forecast period <math>t=[t_0,t_2]</math>, where <math>t_2\geq t_1</math>. This represents the final sweep of the nonlinear model for the period <math>t=[t_0,t_1]</math> after exiting the inner-loop in the [[ | <li>Next we run the nonlinear model for the combined assimilation plus forecast period <math>t=[t_0,t_2]</math>, where <math>t_2\geq t_1</math>. This represents the final sweep of the nonlinear model for the period <math>t=[t_0,t_1]</math> after exiting the inner-loop in the [[I4DVAR]] plus the forecast period <math>t=[t_1,t-2]</math>. The initial condition for the nonlinear mode at <math>t=t_0</math> is <math>{\bf\Psi_b}(t_0)</math> and not the new estimated initial conditions, <math>{\bf\Psi}(t_0) = {\bf\Psi_b}(t_0) + \psi(t_0)</math>. We save the basic state trajectory, <math>{\bf\Psi_b}(t)</math>, of this nonlinear model run for use in the adjoint sensitivity calculation next, and for use in the tangent linear model run later in step (vii). Depending on time for which the sensitivity functional <math>{\cal J}(t)</math> is defined, this will dictate <math>t_2</math>. For example, if <math>{\cal J}(t)</math> is a functional defined during the forecast interval <math>t_1<t<t_2</math>, then <math>t_2>t_1</math> for this run of the nonlinear model. However, if <math>{\cal J}(t)</math> is defined during the assimilation interval <math>t_0<t<t_1</math>, then <math>t_2=t_1</math>. That is, the definition of <math>t_2</math> should be flexible depending on the choice of <math>\cal J</math>.</li> | ||

<li>The next step involves an adjoint sensitivity calculation for the combined assimilation plus forecast period <math>t=[t_2,t_0]</math>. The basic state trajectory for this calculation will be that from the nonlinear model run in step (ii).</li> | <li>The next step involves an adjoint sensitivity calculation for the combined assimilation plus forecast period <math>t=[t_2,t_0]</math>. The basic state trajectory for this calculation will be that from the nonlinear model run in step (ii).</li> | ||

<li>After running the regular adjoint sensitivity calculation in (iii), we will have a full 3D-adjoint state vector at time <math>t=t_0</math>. Let's call this vector <math>\psi^\dagger(t_0)</math>. The next thing we want to do is to compute the dot-product of <math>\psi^\dagger(t_0)</math> with each of the Lanczos vectors from the previous [[ | <li>After running the regular adjoint sensitivity calculation in (iii), we will have a full 3D-adjoint state vector at time <math>t=t_0</math>. Let's call this vector <math>\psi^\dagger(t_0)</math>. The next thing we want to do is to compute the dot-product of <math>\psi^\dagger(t_0)</math> with each of the Lanczos vectors from the previous [[I4DVAR]] run. So if we ran [[I4DVAR]] with <math>k</math> inner-loops, we will have <math>k-</math>Lanczos vectors which we denote as <math>{\bf q}_i</math> where <math>i=1,2,\ldots,k</math>. So we will compute <math>a_i = \psi^T {\bf q}_i</math> where <math>\psi^T</math> is the transpose of the vector <math>\psi^\dagger(t_0)</math>, and <math>a_i</math> for <math>i=1,2,\ldots,k</math> are scalars, so there will be <math>k</math> of them.</li> | ||

<li>The next step is to invert the tridiagonal matrix associated with the Lanczos vectors. Let's denote this matrix as <math>\bf T</math>. So what we want to solve <math>{\bf T}{\bf b}={\bf a}</math>, where <math>\bf a</math> is the <math>k\times 1</math> vector of scalars <math>a_i</math> from step (iv), and <math>\bf b</math> is the <math>k\times 1</math> vector that we want to find. So we solve for <math>b</math> by using a tridiagonal solver.</li> | <li>The next step is to invert the tridiagonal matrix associated with the Lanczos vectors. Let's denote this matrix as <math>\bf T</math>. So what we want to solve <math>{\bf T}{\bf b}={\bf a}</math>, where <math>\bf a</math> is the <math>k\times 1</math> vector of scalars <math>a_i</math> from step (iv), and <math>\bf b</math> is the <math>k\times 1</math> vector that we want to find. So we solve for <math>b</math> by using a tridiagonal solver.</li> | ||

<li>The next step is to compute a weighted sum of the Lanczos vectors. Let's call this <math>\bf z</math>, where <math>{\bf z} = \sum_i (b_i {\bf q}_i)</math> | <li>The next step is to compute a weighted sum of the Lanczos vectors. Let's call this <math>\bf z</math>, where <math>{\bf z} = \sum_i (b_i {\bf q}_i)</math> | ||

for <math>i=1,2,\ldots,k</math>. The <math>b_i</math> are obtained from solving the tridiagonal equation in (v), and the <math>{\bf q}_i</math> are the Lanczos vectors. The vector <math>z</math> is a full-state vector and be used as an initial condition for the tangent linear model in step (vii).</li> | for <math>i=1,2,\ldots,k</math>. The <math>b_i</math> are obtained from solving the tridiagonal equation in (v), and the <math>{\bf q}_i</math> are the Lanczos vectors. The vector <math>z</math> is a full-state vector and be used as an initial condition for the tangent linear model in step (vii).</li> | ||

<li>Finally, we run the tangent linear model from <math>t=[t_0,t_1]</math> using <math>\bf z</math> from (vi) as the initial conditions. During this run of the tangent linear model, we need to read and process the observations <math>\bf y</math> that we used in the [[ | <li>Finally, we run the tangent linear model from <math>t=[t_0,t_1]</math> using <math>\bf z</math> from (vi) as the initial conditions. During this run of the tangent linear model, we need to read and process the observations <math>\bf y</math> that we used in the [[I4DVAR]] of step 1 and write the solution at the observation points and times to the [[MODname]] NetCDF file. The values that we write into this [[MODname]] are actually the values multiplied by error covariance <math>-{\bf O}^{-1}</math> assigned to each observation during the I4DVAR in step (i).</li> | ||

</ol> | </ol> | ||

Latest revision as of 18:06, 21 July 2020

- I4DVAR_ANA_SENSITIVITY (Formerly IS4DVAR_SENSITIVITY)

- Option to activate the observation impact and sensitivity driver which quantifies the impact that each observation has on the 4DVAR data assimilation system. By measuring the sensitivity of the data assimilation system to each observation, we can determine the degree to which each observation contributes to the uncertainty in the circulation estimate. This analysis can help us to determine the type of measurements that need to be made, where to observe, and when.

- required = ADJOINT, NONLINEAR, TANGENT

- related = I4DVAR, LANCZOS

- routine = obs_sen_i4dvar_analysis.h

Mathematical Formulation

The mathematical development presented here closely parallels that of Zhu and Gelaro (2008). The sensitivity of any functional, , of the analysis or forecast can be efficiently computed using the adjoint model which yields information about the gradients of and . We can extent the concept of the adjoint sensitivity to compute the sensitivity of the I4DVAR cost function, , in (1) and any other function of the forecast to the observations, .

In I4DVAR, we define a quadratic cost function:

| (1) |

where is the ocean state vector (, , , , ,) with , is the observation error and error of representativeness covariance matrix, represents the background error covariance matrix, is the innovation vector that represents the difference between the nonlinear background solution () and the observations, . Here, is an operator that samples the nonlinear model at the observation location, , is the linearization of , and the operator is referred as the tangent linear model propagator.

At the minimum of (1), the cost function gradient vanishes and:

| (2) |

where is referred to as the analysis increment, the desired solution of the incremental data assimilation procedure.

The I4DVAR analysis can be written in the more traditional form as ( Daley, 1991), where , the Kalman gain matrix , and is the Hessian matrix. The entire I4DVAR procedure is therefore neatly embodied in . At the cost function minimum, (1) can be written as which yields the sensitivity of the I4DVAR cost function to the observations.

In the current I4DVAR/LANCZOS data assimilation algorithm, the cost function minimum of (1) is identified using the Lanczos method (Golub and Van Loan, 1989), in which case:

| (3) |

where is the matrix of orthogonal Lanczos vectors, and is a known tridiagonal matrix. Each of the iterations of I4DVAR employed in finding the minimum of yields one column of . From (3) we can identify the Kalman gain matrix as in which case represents the adjoint of the entire I4DVAR system. The action of on the vector in (3) can be readily computed since the Lanczos vectors and the matrix are available at the end of each I4DVAR assimilation cycle.

Therefore, the basic observation sensitivity algorithm is as follows:

- Force the adjoint model with to yield .

- Operate on with which is equivalent to a rank approximation of the Hessian matrix.

- Integrate the results of step (ii) forward in time using the tangent linear model and save the solution . That is, the solution at observation locations.

- Multiply by to yield .

Consider now a forecast for the interval initialized from obtained from an assimilation cycle over the interval , where is the forecast lead time. In addition, consider that depends on the forecast , and which characterizes some future aspect of the forecast circulation. According to the chain rule, the sensitivity of to the observations collected during the assimilation cycle is given by:

| (4) |

which again can be readily evaluated using the adjoint model denoted by and the adjoint of I4DVAR, .

Technical Description

This ROMS driver can be used to evaluate the impact of each observation on the I4DVAR data assimilation algorithm by measuring their sensitivity over a specified circulation functional index, . This algorithm is an extension of the adjoint sensitivity driver, AD_SENSITIVITY. As mentioned above, this algorithm is equivalent to taking the adjoint of the I4DVAR algorithm.

The following steps are carried out in obs_sen_is4dvar.h:

- We begin by running the incremental strong constraint 4DVar data assimilation algorithm (I4DVAR) with the Lanczos conjugate gradient minimization algorithm (LANCZOS) using inner-loops () and a single outer-loop () for the period . We will denote by the background initial condition, and the observations vector by . The resulting Lanczos vectors that we save in the adjoint NetCDF file will be denoted by , where .

- Next we run the nonlinear model for the combined assimilation plus forecast period , where . This represents the final sweep of the nonlinear model for the period after exiting the inner-loop in the I4DVAR plus the forecast period . The initial condition for the nonlinear mode at is and not the new estimated initial conditions, . We save the basic state trajectory, , of this nonlinear model run for use in the adjoint sensitivity calculation next, and for use in the tangent linear model run later in step (vii). Depending on time for which the sensitivity functional is defined, this will dictate . For example, if is a functional defined during the forecast interval , then for this run of the nonlinear model. However, if is defined during the assimilation interval , then . That is, the definition of should be flexible depending on the choice of .

- The next step involves an adjoint sensitivity calculation for the combined assimilation plus forecast period . The basic state trajectory for this calculation will be that from the nonlinear model run in step (ii).

- After running the regular adjoint sensitivity calculation in (iii), we will have a full 3D-adjoint state vector at time . Let's call this vector . The next thing we want to do is to compute the dot-product of with each of the Lanczos vectors from the previous I4DVAR run. So if we ran I4DVAR with inner-loops, we will have Lanczos vectors which we denote as where . So we will compute where is the transpose of the vector , and for are scalars, so there will be of them.

- The next step is to invert the tridiagonal matrix associated with the Lanczos vectors. Let's denote this matrix as . So what we want to solve , where is the vector of scalars from step (iv), and is the vector that we want to find. So we solve for by using a tridiagonal solver.

- The next step is to compute a weighted sum of the Lanczos vectors. Let's call this , where for . The are obtained from solving the tridiagonal equation in (v), and the are the Lanczos vectors. The vector is a full-state vector and be used as an initial condition for the tangent linear model in step (vii).

- Finally, we run the tangent linear model from using from (vi) as the initial conditions. During this run of the tangent linear model, we need to read and process the observations that we used in the I4DVAR of step 1 and write the solution at the observation points and times to the MODname NetCDF file. The values that we write into this MODname are actually the values multiplied by error covariance assigned to each observation during the I4DVAR in step (i).

![t=[t_{0}+\tau ,\;t_{0}+\tau +t_{f}]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/ef32e8e5c9d07811ab0a73354795d9e535b9870b)

![t=[t_{0},t_{0}+\tau ]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/c96ec79c508db259febecaed385d09338efb6421)

![t=[t_{0},t_{1}]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/b9bb4f1400e596d7de145eb550cacfb84b388723)

![t=[t_{0},t_{2}]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/adaa0fcd53aefa4c52b806376bba188dd0d23e6f)

![t=[t_{1},t-2]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/84d504d58f842d25f3136fa871447740688312e5)

![t=[t_{2},t_{0}]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/222f11686198e8170a335f7850fe0770a4c3f723)