Difference between revisions of "I4DVAR"

m (Text replacement - "IS4DVAR" to "I4DVAR") (change visibility) |

|||

| Line 5: | Line 5: | ||

This memo describes a series of calculations using the new preconditioned version of the ROMS [[Options# | This memo describes a series of calculations using the new preconditioned version of the ROMS [[Options#I4DVAR|I4DVAR]] algorithm based on the Lanczos algorithm. This driver in ROMS is referred to as [[cgradient_lanczos.h]] and will become the default and only [[Options#I4DVAR|I4DVAR]] option that will be available and maintained for ROMS. | ||

The conjugate gradient solver for ROMS | The conjugate gradient solver for ROMS I4DVAR is based on the Lanczos method as described by Fisher ([[Bibliography#FisherM_1997a|1997]], [[Bibliography#FisherM_1998a|1998]]). Two versions of preconditioning are available in ROMS I4DVAR: these are "spectral" preconditioning as described by [[Bibliography#FisherM_1998|Fisher (1998)]], and "Ritz" preconditioning as described by [[Bibliography#TshimangaJ_2008a|Tshimanga ''et al.'' (2008)]]. The "spectral" and "Ritz" nomenclature used here follows that adopted by [[Bibliography#TshimangaJ_2008a|Tshimanga ''et al.'' (2008)]]. The actual implementation of both preconditioners is based on the PhD thesis of [[Bibliography#TshimangaJ_2007a|Tshimanga (2007)]]. | ||

The model configuration used in all of the following experiments is the Intra-Americas Sea with <math>40~{\rm km}</math> resolution. Details of the model set-up, forcing, boundary conditions, and | The model configuration used in all of the following experiments is the Intra-Americas Sea with <math>40~{\rm km}</math> resolution. Details of the model set-up, forcing, boundary conditions, and I4DVAR configuration are described in [[Bibliography#PowellBS_2008a|Powell ''et al.'' (2008)]]. The multivariate balance operator is not used in any of these calculations, and only the initial conditions are adjusted. Familiarity with I4DVAR and its implementation in ROMS is assumed. | ||

| Line 32: | Line 32: | ||

where <math>{\bf x}</math> represents the ROMS state-vector; <math>\delta {\bf x}^k</math> is the state-vector increment for the <math>k^{\rm th}</math> outer-loop iterate; <math>{\bf d}_b^{k-1}={\bf x}_b-{\bf x}^{k-1}</math> is the deviation of the background state-vector <math>{\bf x}_b</math> from the <math>(k-1)</math> iterate; <math>{\bf d}_o^{k-1}={\bf y}-{\cal G}({\bf x}^{k-1})</math> is the innovation vector with respect to the observations <math>{\bf y}</math> and the <math>(k-1)</math> iterate; <math>{\cal G}({\bf x})\equiv {\bf H}{\cal M}({\bf x})</math> represents nonlinear ROMS state integrated to the observation times by NLROMS (<math>{\cal M}({\bf x})</math>), and interpolated to the observation locations by the observation operator <math>{\bf H}</math> (assumed linear for convenience); and <math>{\bf G}^{k-1}</math> denotes the corresponding time integrated and interpolated increment <math>\delta {\bf x}</math> where the tangent linear model (TLROMS) is linearized about the <math>(k-1)</math> interate, <math>{\bf x}^{k-1}</math>. The matrices <math>{\bf B}</math> and <math>{\bf O}</math> are the background error and observation error covariance matrices respectively. | where <math>{\bf x}</math> represents the ROMS state-vector; <math>\delta {\bf x}^k</math> is the state-vector increment for the <math>k^{\rm th}</math> outer-loop iterate; <math>{\bf d}_b^{k-1}={\bf x}_b-{\bf x}^{k-1}</math> is the deviation of the background state-vector <math>{\bf x}_b</math> from the <math>(k-1)</math> iterate; <math>{\bf d}_o^{k-1}={\bf y}-{\cal G}({\bf x}^{k-1})</math> is the innovation vector with respect to the observations <math>{\bf y}</math> and the <math>(k-1)</math> iterate; <math>{\cal G}({\bf x})\equiv {\bf H}{\cal M}({\bf x})</math> represents nonlinear ROMS state integrated to the observation times by NLROMS (<math>{\cal M}({\bf x})</math>), and interpolated to the observation locations by the observation operator <math>{\bf H}</math> (assumed linear for convenience); and <math>{\bf G}^{k-1}</math> denotes the corresponding time integrated and interpolated increment <math>\delta {\bf x}</math> where the tangent linear model (TLROMS) is linearized about the <math>(k-1)</math> interate, <math>{\bf x}^{k-1}</math>. The matrices <math>{\bf B}</math> and <math>{\bf O}</math> are the background error and observation error covariance matrices respectively. | ||

Following [[Bibliography#WeaverAT_2003a|Weaver ''et al.'' (2003)]], a first level of inner-loop preconditioning is performed via a transformation of variable, <math>{\bf v}^k={\bf B}^{-\frac{1}{2}}\delta {\bf x}^k</math>, hereafter referred to as v-space. By default, this first level of preconditioning is always done in ROMS | Following [[Bibliography#WeaverAT_2003a|Weaver ''et al.'' (2003)]], a first level of inner-loop preconditioning is performed via a transformation of variable, <math>{\bf v}^k={\bf B}^{-\frac{1}{2}}\delta {\bf x}^k</math>, hereafter referred to as v-space. By default, this first level of preconditioning is always done in ROMS I4DVAR. ROMS I4DVAR now provides the option to perform a second level of inner-loop preconditioning. This is activated using the logical flags '''[[Variables#Lprecond|Lprecond]]''' and '''[[Variables#Lritz|Lritz]]''' in the [[s4dvar.in]] file. With '''[[Variables#Lprecond|Lprecond]]=.TRUE.''' preconditioning is activated according to the value of the flag '''[[Variables#Lritz|Lritz]]'''. With '''[[Variables#Lritz|Lritz]]=.FALSE.''' "spectral" preconditioning is performed, while with '''[[Variables#Lritz|Lritz]]=.TRUE.''', "Ritz" preconditioning is used. | ||

The second level of preconditioning is only active for <math>k>1</math> (''i.e.'' when more than 1 outer-loop is employed). During the first outer-loop only the first level preconditioner in v-space is used. When '''[[Variables#Lprecond|Lprecond]]=.TRUE.''', a new transformation of variable is performed in the inner-loops according to <math>{\bf y}_k=\prod_{j=1}^{k-1} {\bf P}_j{\bf v}^k</math> where, following Tshimanga (2007): | The second level of preconditioning is only active for <math>k>1</math> (''i.e.'' when more than 1 outer-loop is employed). During the first outer-loop only the first level preconditioner in v-space is used. When '''[[Variables#Lprecond|Lprecond]]=.TRUE.''', a new transformation of variable is performed in the inner-loops according to <math>{\bf y}_k=\prod_{j=1}^{k-1} {\bf P}_j{\bf v}^k</math> where, following Tshimanga (2007): | ||

| Line 90: | Line 90: | ||

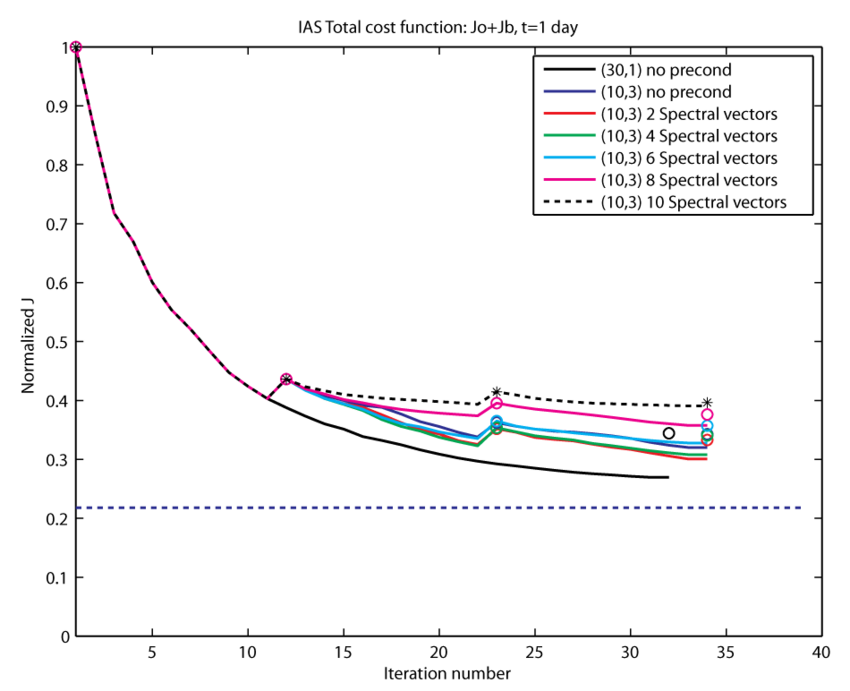

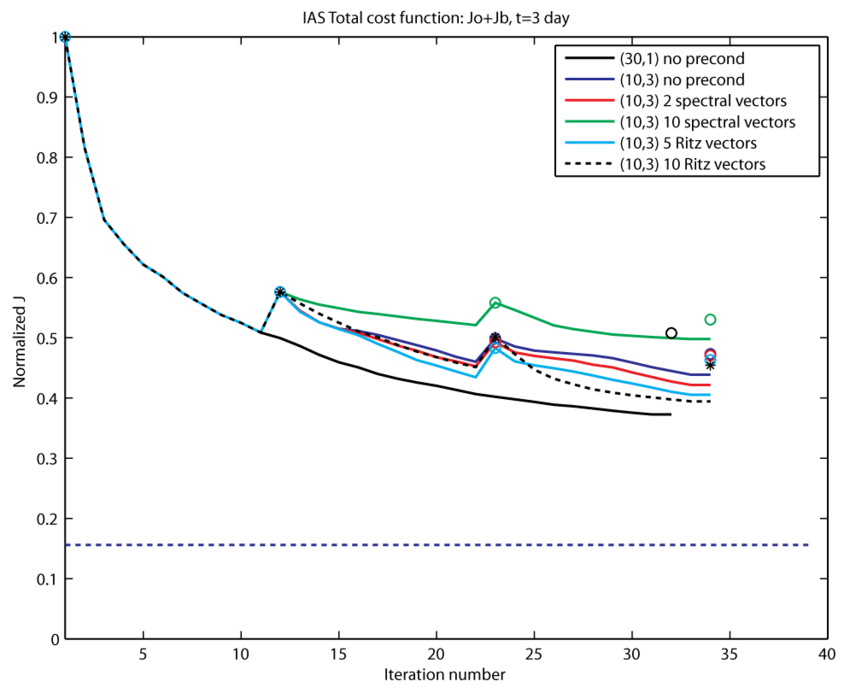

The values of normalized <math>J^k_{\rm NL}</math> are indicated in Fig. 1 as the color-coded symbols <math>\circ</math> or *. The dashed horizontal line in Fig. 1 represents the theoretical minimum value of <math>J</math> given by half the number of observations assimilated during the 1-day interval. | The values of normalized <math>J^k_{\rm NL}</math> are indicated in Fig. 1 as the color-coded symbols <math>\circ</math> or *. The dashed horizontal line in Fig. 1 represents the theoretical minimum value of <math>J</math> given by half the number of observations assimilated during the 1-day interval. | ||

For reference, two unpreconditioned | For reference, two unpreconditioned I4DVAR calculations are shown in Fig. 1 corresponding to (30,1) and (10,3). The remaining curves in Fig. 1 show the evolution of <math>J</math> for (10,3) using spectral preconditioning and different numbers of the estimated eigenvectors <math>{\bf u}_i</math> of the Hessian matrix <math>{\bf A}</math>. In all cases, the solution is identical for the first 10 iterations because second level preconditioning is only applied beyond that point. The estimated accuracy <math>\epsilon</math> varies between <math>O(10^{-6})</math> for <math>{\bf u}_1</math> and <math>{\bf u}_2</math> and <math>O(10^{-2})</math> for <math>{\bf u}_i,~~i=[2,10]</math>. Figure 1 reveals that as the number of eigenvectors used increases from 2 to 10, the values of <math>J</math> and <math>J_{\rm NL}</math> increase, indicating that using the less accurate vectors <math>{\bf u}_i</math> degrades the convergence rate of the cost function. This is in agreement with [[Bibliography#TshimangaJ_2008a|Tshimanga ''et al.'' (2008)]]. The acceptable accuracy of the eigenvectors is controlled by the ROMS input parameter [[Variables#HevecErr|HevecErr]], and the experiments in Fig. 1 suggest that [[Variables#HevecErr|HevecErr]] should be chosen to be <math> < 10^{-2}</math>. The best case shown in Fig. 1 corresponds to using 2 eigenvectors. On first inspection, Fig. 1 suggests that the unpreconditioned case (30,1) produces the best solution. This is certainly true for <math>J</math>, however for the more important measure <math>J_{\rm NL}</math>, the spectrally preconditioned (10,3) case using 2 eigenvectors is superior. | ||

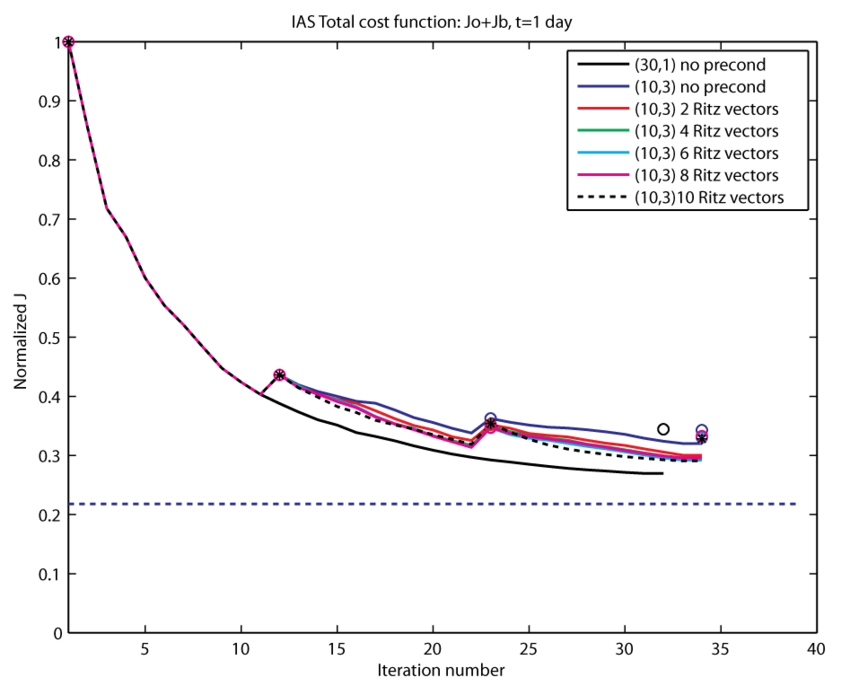

[[Image:cost_fig2.png||center|thumb|850px|'''Figure 2:''' The normalized cost function <math>J</math> computed within inner-loops (curves), and <math>J_{NL}</math> the cost function computed relative to the nonlinear model during outer-loops (open circles). The various cases shown are for a 1-day assimilation window: (30,1) no preconditioning ('''solid black line''', '''black circles'''); (10,3) no preconditioning (<span style="color:#2E3192">'''solid dark blue line'''</span>, <span style="color:#2E3192">'''dark blue circles'''</span>); (10,3) and Ritz preconditioning using 2 Ritz vectors (<span style="color:#ED1C24">'''red curve'''</span>, <span style="color:#ED1C24">'''red circles'''</span>), 4 Ritz vectors (<span style="color:#00A651">'''green curve'''</span>, <span style="color:#00A651">'''green circles'''</span>), 6 Ritz vectors (<span style="color:#00AEEF">'''turquoise line'''</span>, <span style="color:#00AEEF">'''turquoise circles'''</span>), 8 Ritz vectors (<span style="color:#EC008C">'''purple line'''</span>, <span style="color:#EC008C">'''purple circles'''</span>), and 10 Ritz vectors ('''black dashed curve''', '''black asterisks''').The horizontal <span style="color:#2E3192">'''dashed blue line'''</span> represents the theoretical minimum value of the cost function given by half the number of assimilated observations.]] | [[Image:cost_fig2.png||center|thumb|850px|'''Figure 2:''' The normalized cost function <math>J</math> computed within inner-loops (curves), and <math>J_{NL}</math> the cost function computed relative to the nonlinear model during outer-loops (open circles). The various cases shown are for a 1-day assimilation window: (30,1) no preconditioning ('''solid black line''', '''black circles'''); (10,3) no preconditioning (<span style="color:#2E3192">'''solid dark blue line'''</span>, <span style="color:#2E3192">'''dark blue circles'''</span>); (10,3) and Ritz preconditioning using 2 Ritz vectors (<span style="color:#ED1C24">'''red curve'''</span>, <span style="color:#ED1C24">'''red circles'''</span>), 4 Ritz vectors (<span style="color:#00A651">'''green curve'''</span>, <span style="color:#00A651">'''green circles'''</span>), 6 Ritz vectors (<span style="color:#00AEEF">'''turquoise line'''</span>, <span style="color:#00AEEF">'''turquoise circles'''</span>), 8 Ritz vectors (<span style="color:#EC008C">'''purple line'''</span>, <span style="color:#EC008C">'''purple circles'''</span>), and 10 Ritz vectors ('''black dashed curve''', '''black asterisks''').The horizontal <span style="color:#2E3192">'''dashed blue line'''</span> represents the theoretical minimum value of the cost function given by half the number of assimilated observations.]] | ||

| Line 121: | Line 121: | ||

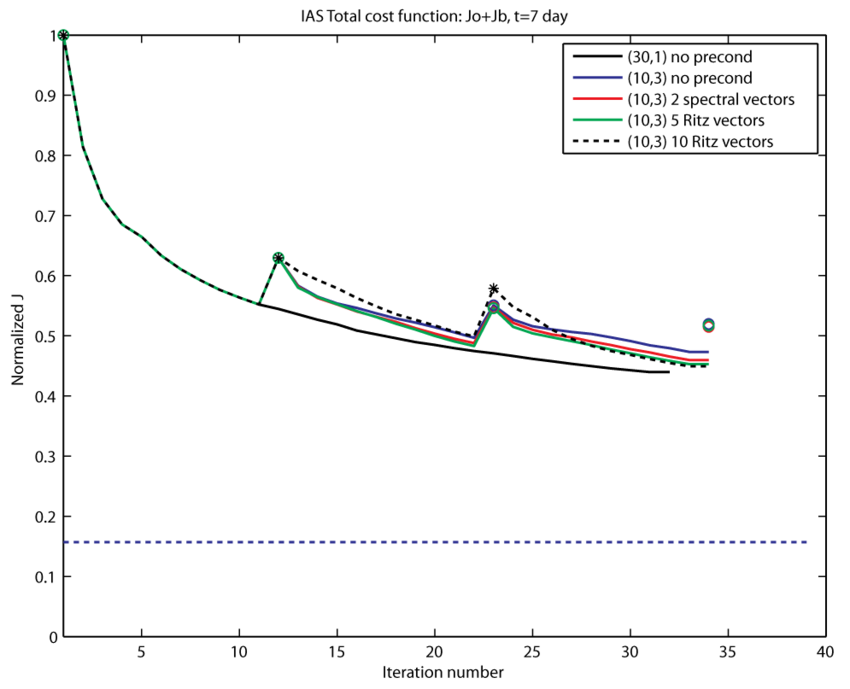

[[Image:cost_fig6.png||center|thumb|850px|'''Figure 6:''' The normalized cost function <math>J</math> computed within inner-loops (curves), and <math>J_{NL}</math> the cost function computed relative to the nonlinear model during outer-loops (open circles). The various cases shown are for a 7-day assimilation window: (30,1) no preconditioning ('''solid black curve''', '''black circles'''); (10,3) no preconditioning (<span style="color:#2E3192">'''solid dark blue line'''</span>, <span style="color:#2E3192">'''dark blue circles'''</span>); (10,3) and spectral preconditioning using 2 eigenvectors (<span style="color:#ED1C24">'''red curve'''</span>, <span style="color:#ED1C24">'''red circles'''</span>), (10,3) and Ritz preconditioning using 5 Ritz vectors (<span style="color:#00A651">'''green curve'''</span>, <span style="color:#00A651">'''green circles'''</span>); and 10 Ritz vectors ('''black dashed curve''', '''black asterisks'''). The horizontal <span style="color:#2E3192">'''dashed blue line'''</span> represents the theoretical minimum value of the cost function given by half the number of assimilated observations.]] | [[Image:cost_fig6.png||center|thumb|850px|'''Figure 6:''' The normalized cost function <math>J</math> computed within inner-loops (curves), and <math>J_{NL}</math> the cost function computed relative to the nonlinear model during outer-loops (open circles). The various cases shown are for a 7-day assimilation window: (30,1) no preconditioning ('''solid black curve''', '''black circles'''); (10,3) no preconditioning (<span style="color:#2E3192">'''solid dark blue line'''</span>, <span style="color:#2E3192">'''dark blue circles'''</span>); (10,3) and spectral preconditioning using 2 eigenvectors (<span style="color:#ED1C24">'''red curve'''</span>, <span style="color:#ED1C24">'''red circles'''</span>), (10,3) and Ritz preconditioning using 5 Ritz vectors (<span style="color:#00A651">'''green curve'''</span>, <span style="color:#00A651">'''green circles'''</span>); and 10 Ritz vectors ('''black dashed curve''', '''black asterisks'''). The horizontal <span style="color:#2E3192">'''dashed blue line'''</span> represents the theoretical minimum value of the cost function given by half the number of assimilated observations.]] | ||

The results from the same combinations of inner- and outer-loops and preconditioners using a 7-day assimilation window are shown in Fig. 6. In this case, some of the <math>J_{\rm NL}</math> values are missing at the end of the final outer-loop for some of the calculations because the initial condition generated by | The results from the same combinations of inner- and outer-loops and preconditioners using a 7-day assimilation window are shown in Fig. 6. In this case, some of the <math>J_{\rm NL}</math> values are missing at the end of the final outer-loop for some of the calculations because the initial condition generated by I4DVAR caused the model to blow-up. This is indicative that a 7-day assimilation combined with a large combined number of inner- and outer-loops may be pushing the tangent linear assumption beyond what is a reasonable limit. Figure 6 suggests that while <math>J</math> sometimes shows a significant improvement with preconditioning, the assimilation scheme is struggling to converge. The values of <math>\epsilon</math> for the eigenvectors and Ritz vectors in this case are consistently higher than for the 1-day and 3-day cases, so the uninspiring performance of the preconditioners in the 7-day case may be due partly to the lower accuracy of the available vectors and partly to violation of the tangent linear assumption. | ||

===Summary and Recommendations=== | ===Summary and Recommendations=== | ||

The preconditioned Lanczos-method based | The preconditioned Lanczos-method based I4DVAR scheme has been successfully implemented in the ROMS code. The experiments described above indicate that the algorithm is working correctly. Two types of second level preconditioning are available: so-called spectral preconditioning in which estimates of the Hessian eigenvectors are used; and Ritz preconditioning in which the Ritz vectors of the Hessian are used. Although not explicitly stated or obvious from the above discussion, the Hessian eigenvectors estimates and Ritz vectors are identical, so the only difference between the two approaches to preconditioning is the way in which the eigenvector information is used. As discussed in the [[#Spectral vs Ritz preconditioning|Spectral vs Ritz preconditioning]] section, the accuracy of the Hessian eigenvectors <math>{\bf u}_i</math> that are employed in the spectral preconditioner is a critical factor: using eigenvectors for which <math>\epsilon</math> is too large degrades the performance of I4DVAR. The Ritz preconditioner of [[Bibliography#TshimangaJ_2008a|Tshimanga ''et al.'' (2008)]], however, cleverly circumvents this problem by accounting for the formal error in the eigenvectors (now referred to as the Ritz vectors). | ||

While the above experiments are far from exhaustive, they do give some sense of the performance that can be expected from preconditioned | While the above experiments are far from exhaustive, they do give some sense of the performance that can be expected from preconditioned I4DVAR system. Based on these experiments, the following recommendations can be considered as providing useful guidance for configuring the ROMS I4DVAR system and choosing the preconditioner parameters: | ||

# For spectral preconditioning, it is recommended that the threshhold for acceptable accuracy of the Hessian eigenvectors estimates, [[Variables#HevecErr|HevecErr]] be chosen to be <math>\le 10^{-3}-10^{-4}</math>. | # For spectral preconditioning, it is recommended that the threshhold for acceptable accuracy of the Hessian eigenvectors estimates, [[Variables#HevecErr|HevecErr]] be chosen to be <math>\le 10^{-3}-10^{-4}</math>. | ||

Latest revision as of 18:08, 21 July 2020

| Variational Data Assimilation Menu |

|---|

| 1. Introduction |

| 2. Incremental 4D Variational Data Assimilation |

| 3. Analysis-Forecast Cycle Observation Impacts |

This memo describes a series of calculations using the new preconditioned version of the ROMS I4DVAR algorithm based on the Lanczos algorithm. This driver in ROMS is referred to as cgradient_lanczos.h and will become the default and only I4DVAR option that will be available and maintained for ROMS.

The conjugate gradient solver for ROMS I4DVAR is based on the Lanczos method as described by Fisher (1997, 1998). Two versions of preconditioning are available in ROMS I4DVAR: these are "spectral" preconditioning as described by Fisher (1998), and "Ritz" preconditioning as described by Tshimanga et al. (2008). The "spectral" and "Ritz" nomenclature used here follows that adopted by Tshimanga et al. (2008). The actual implementation of both preconditioners is based on the PhD thesis of Tshimanga (2007).

The model configuration used in all of the following experiments is the Intra-Americas Sea with resolution. Details of the model set-up, forcing, boundary conditions, and I4DVAR configuration are described in Powell et al. (2008). The multivariate balance operator is not used in any of these calculations, and only the initial conditions are adjusted. Familiarity with I4DVAR and its implementation in ROMS is assumed.

The Preconditioners

Following Tshimanga et al. (2008) the cost function can be expressed in incremental form as:

| (1) |

where represents the ROMS state-vector; is the state-vector increment for the outer-loop iterate; is the deviation of the background state-vector from the iterate; is the innovation vector with respect to the observations and the iterate; represents nonlinear ROMS state integrated to the observation times by NLROMS (), and interpolated to the observation locations by the observation operator (assumed linear for convenience); and denotes the corresponding time integrated and interpolated increment where the tangent linear model (TLROMS) is linearized about the interate, . The matrices and are the background error and observation error covariance matrices respectively.

Following Weaver et al. (2003), a first level of inner-loop preconditioning is performed via a transformation of variable, , hereafter referred to as v-space. By default, this first level of preconditioning is always done in ROMS I4DVAR. ROMS I4DVAR now provides the option to perform a second level of inner-loop preconditioning. This is activated using the logical flags Lprecond and Lritz in the s4dvar.in file. With Lprecond=.TRUE. preconditioning is activated according to the value of the flag Lritz. With Lritz=.FALSE. "spectral" preconditioning is performed, while with Lritz=.TRUE., "Ritz" preconditioning is used.

The second level of preconditioning is only active for (i.e. when more than 1 outer-loop is employed). During the first outer-loop only the first level preconditioner in v-space is used. When Lprecond=.TRUE., a new transformation of variable is performed in the inner-loops according to where, following Tshimanga (2007):

| (2) |

| (3) |

In (2) and (3), refers to the inner-loop and is the number of inner-loops used per outer-loop; are estimates of the eigenvalue/eigenvector pairs of the Hessian matrix ; are the Ritz value/Ritz vector pairs of ; and is the Lanczos vector for the inner-loop of outer-loop . The factor arises from the formal error in the Ritz vectors of the Hessian matrix and is given by , where and is the matrix of Lanczos vectors from outer-loop , the vector has zero elements except for the element which has a value of 1, and (Fisher, 1997).

If, for example, 3 outer-loops are used, then two second level preconditioners will be employed, and . The preconditioner is applied in outer-loop to convert from v-space to -space (), and is based on the Hessian eigenvectors or Ritz vectors computed during outer-loop . The preconditioner is applied in outer-loop and is based on the Hessian eigenvectors or Ritz vectors of outer-loop . The change of variable in this case is , and converts from -space to -space. In this way the second level preconditioners derived from each subsequent outer-loop are applied sequentially.

Illustrative Results

All of the following experiments use ROMS configured for the Intra-Americas Sea with 40~km resolution in which only SST and SSH observations are assimilated (Powell et al., 2008). Data assimilation windows of 1~day, 3~days and 7~days were used.

Spectral vs Ritz preconditioning

As noted by Tshimanga et al. (2008), the accuracy of the Hessian eigenvectors that should be employed during spectral preconditioning is an important factor controlling the behavior the preconditioner. If the are normalized to have unit norm, then formally, the error can be defined as where is the estimate of the largest eigenvalue of the Hessian matrix . Tshimanga et al. (2008) found that if eigenvectors with unacceptably large are used in the spectral preconditioner, the performance of the preconditioner can be significantly degraded. The same is true in ROMS as described below.

Figure 1 shows the value of computing using (1) for several different configurations of inner-loops and outer-loops for a 1 day assimilation interval. In Fig. 1, has been normalized by its initial value. For convenience, the notation is used where is the number of outer-loops employed, and is the number of inner-loops per outer-loop. The inner-loop count is rather than because the first inner-loop of each outer-loop acts as an important initialization step for the Lanczos vector sequence. The total number of iterations performed is .

The curves in Fig. 1 show the cost function computed using (1) using the increments from the inner-loops. However, a much more important practical indicator of the overall performance of the data assimilation system is the cost function, , computed directly from the NLROMS solution during each outer-loop. Using the previous notation:

| (4) |

The values of normalized are indicated in Fig. 1 as the color-coded symbols or *. The dashed horizontal line in Fig. 1 represents the theoretical minimum value of given by half the number of observations assimilated during the 1-day interval.

For reference, two unpreconditioned I4DVAR calculations are shown in Fig. 1 corresponding to (30,1) and (10,3). The remaining curves in Fig. 1 show the evolution of for (10,3) using spectral preconditioning and different numbers of the estimated eigenvectors of the Hessian matrix . In all cases, the solution is identical for the first 10 iterations because second level preconditioning is only applied beyond that point. The estimated accuracy varies between for and and for . Figure 1 reveals that as the number of eigenvectors used increases from 2 to 10, the values of and increase, indicating that using the less accurate vectors degrades the convergence rate of the cost function. This is in agreement with Tshimanga et al. (2008). The acceptable accuracy of the eigenvectors is controlled by the ROMS input parameter HevecErr, and the experiments in Fig. 1 suggest that HevecErr should be chosen to be . The best case shown in Fig. 1 corresponds to using 2 eigenvectors. On first inspection, Fig. 1 suggests that the unpreconditioned case (30,1) produces the best solution. This is certainly true for , however for the more important measure , the spectrally preconditioned (10,3) case using 2 eigenvectors is superior.

Figure 2 has the same format as Fig. 1 and shows instead the case where Ritz preconditioning is employed using different numbers of Ritz vectors. As discussed by Tshimanga et al. (2008), the Ritz preconditioner is less sensitive to the accuracy of the Ritz vectors that are used. This is also the case in Fig. 2 where increasing the number of vectors used leads to a continual increase in the rate of convergence of and , despite the fact that for each is quite large for . In fact, (10,3) using all 10 available is the best case in Fig. 2 both in terms of and .

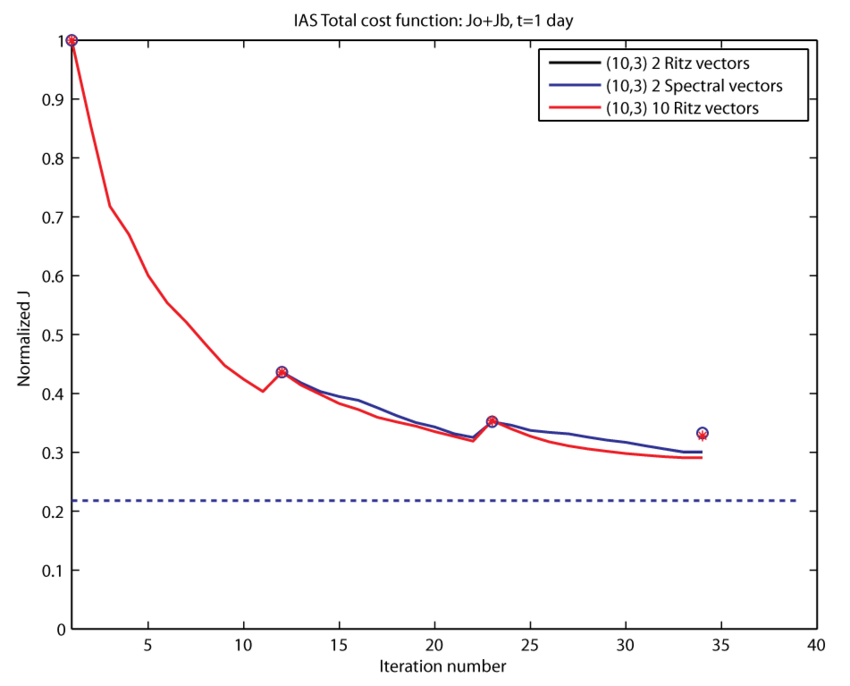

Inspection of equations (2) and (3) shows that the spectral and Ritz preconditioners are similar except that the Ritz preconditioner possesses an additional term which accounts for the formal error in the Ritz vector estimates. Therefore, if both preconditioners are restricted to using only accurate eigenvectors (i.e. small ), both should yield very similar results because the last term in equation (3) will be small. This is confirmed in Fig. 3 which shows and for (10,3) using spectral and Ritz preconditioning with 2 eigenvectors - the two cases are indistinguishable. Figure 3 also shows the (10,3) case using Ritz preconditioning and 10 Ritz vectors, and indicates that while is significantly smaller than the case using only 2 vectors, the percentage reduction in is not nearly as marked.

Ritz Preconditioning and Iteration Dependence

The results from the previous section indicate that Ritz preconditioning is generally superior to spectral preconditioning and more robust in the sense that it is less sensitive to the accuracy of the eigenvectors that are admitted to the preconditioners. In this section the sensitivity of the Ritz preconditioner to the combination of inner- and outer-loops is considered.

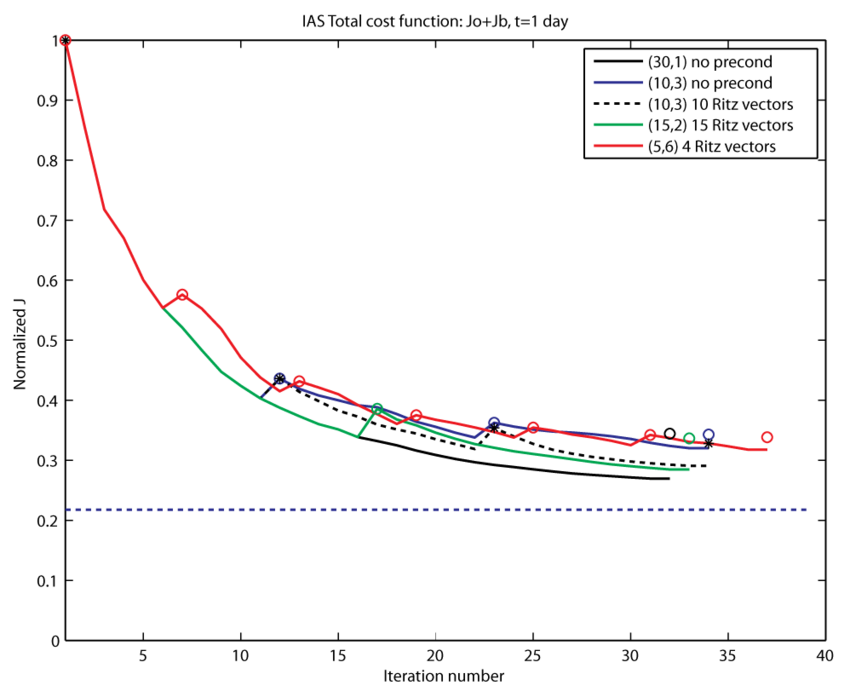

Figure 4 shows and for 1-day cases for which the total number of iterations is similar () but which employ different combinations of inner- and outer-loops. The computational effort is therefore comparable in all cases. The (30,1) and (10,3) reference cases that use no preconditioning are included in Fig. 4. The best case identified in section 3.1 was (10,3) with Ritz preconditioning using all 10 Ritz vectors from outer-loops and . This case is reproduced in Fig. 3, along with a (15,2) case and a (5,6) case. In the (15,2) case there are 15 Ritz vectors available for preconditioning during the 2nd outer-loop, and Fig. 4 shows that while is lower than the (10,3) case at the end of the experiment, the (10,3) case is still superior based on . During the (5,6) case there are potentially 6 Ritz vectors available, during outer-loops , but in general only 4 of them have sufficiently small to be useful. Each iteration of the Lanczos algorithm yields not only an additional Ritz vector, but also refines the estimates of those Ritz vectors identified during previous inner-loops. Therefore, decreases for all of the Ritz vectors as the number of inner-loops increases. Attempts to use all 6 Ritz vectors lead to significant degradation of and (not shown). Figure 4 shows the (5,6) case using 4 Ritz vectors which does not perform as well as (10,3) or (15,2) and is similar to the case with no preconditioning.

Figure 4 suggests that for a given computational effort (as dictated by the combined number of innner- and outer-loops), Ritz preconditioning is most effective with and . This reflects the trade-off between the increased accuracy of the available Ritz vectors with increasing number of inner-loops, and the benefits of updating the non-linear ROMS solution about which the inner-loop minimizations are performed.

Dependence on Assimilation Time Window

A subset of the experiments presented in the previous 2 sections were repeated using 3-day and 7-day assimilation windows. The results for the 3-day case are shown in Fig. 5. Figure 5 shows results from two spectrally preconditioned (10,3) cases, one employing 2 eigenvectors and the other 10 eigenvectors. As in the 1-day case, the performance of spectral preconditioning is degraded by using inaccurate estimates of the Hessian eigenvectors. The 2 eigenvector case, however, is superior to the (30,1) case with no preconditioning, but only marginally better than (10,3) case with no preconditioning. Two (10,3) cases using Ritz preconditioning and 5 and 10 Ritz vectors respectively are also shown in Fig. 5. The latter is the best case of all for .

The results from the same combinations of inner- and outer-loops and preconditioners using a 7-day assimilation window are shown in Fig. 6. In this case, some of the values are missing at the end of the final outer-loop for some of the calculations because the initial condition generated by I4DVAR caused the model to blow-up. This is indicative that a 7-day assimilation combined with a large combined number of inner- and outer-loops may be pushing the tangent linear assumption beyond what is a reasonable limit. Figure 6 suggests that while sometimes shows a significant improvement with preconditioning, the assimilation scheme is struggling to converge. The values of for the eigenvectors and Ritz vectors in this case are consistently higher than for the 1-day and 3-day cases, so the uninspiring performance of the preconditioners in the 7-day case may be due partly to the lower accuracy of the available vectors and partly to violation of the tangent linear assumption.

Summary and Recommendations

The preconditioned Lanczos-method based I4DVAR scheme has been successfully implemented in the ROMS code. The experiments described above indicate that the algorithm is working correctly. Two types of second level preconditioning are available: so-called spectral preconditioning in which estimates of the Hessian eigenvectors are used; and Ritz preconditioning in which the Ritz vectors of the Hessian are used. Although not explicitly stated or obvious from the above discussion, the Hessian eigenvectors estimates and Ritz vectors are identical, so the only difference between the two approaches to preconditioning is the way in which the eigenvector information is used. As discussed in the Spectral vs Ritz preconditioning section, the accuracy of the Hessian eigenvectors that are employed in the spectral preconditioner is a critical factor: using eigenvectors for which is too large degrades the performance of I4DVAR. The Ritz preconditioner of Tshimanga et al. (2008), however, cleverly circumvents this problem by accounting for the formal error in the eigenvectors (now referred to as the Ritz vectors).

While the above experiments are far from exhaustive, they do give some sense of the performance that can be expected from preconditioned I4DVAR system. Based on these experiments, the following recommendations can be considered as providing useful guidance for configuring the ROMS I4DVAR system and choosing the preconditioner parameters:

- For spectral preconditioning, it is recommended that the threshhold for acceptable accuracy of the Hessian eigenvectors estimates, HevecErr be chosen to be .

- For Ritz preconditioning, Ritz vectors of relatively low accuracy can be tolerated, so HevecErr is recommended.

- For any problem there will be a maximum acceptable computational cost which will dictate the total number of inner-loops and outer-loops that can be used. There is a trade-off between the number of inner-loops and outer-loops in that increasing the number of inner-loops yields more eigenvectors or Ritz vectors of acceptable accuracy for the preconditioner, while increasing the number of outer-loops allows more frequent updating of the NLROMS solutions which helps to drive down . The recommendation here is that the number of inner-loops be at least 10, and that the number of outer-loops be at least 3.

- The experience here is that a long assimilation window can degrade the performance of the preconditioner. This needs to be more fully explored, but is probably associated with violation of the tangent linear assumption when the assimilation window is excessively long.

![{\displaystyle {{\bf {P}}}_{j}=\prod _{{i=1}}^{m}[{{\bf {I}}}-(1-\lambda _{i}^{{{\frac {1}{2}}}}){{\bf {u}}}_{i}{{\bf {u}}}_{i}^{T}]~~{\text{for spectral preconditioning}}}](https://www.myroms.org/myroms.org/v1/media/math/render/svg/b3ed0d6280a67c15a813e23a21b53cbca9fbba71)

![{\displaystyle {{\bf {P}}}_{j}=\prod _{{i=1}}^{m}[{{\bf {I}}}-(1-\theta _{i}^{{{\frac {1}{2}}}}){{\bf {z}}}_{i}{{\bf {z}}}_{i}^{T}+\theta _{i}^{{{\frac {1}{2}}}}w_{i}{{\bf {z}}}_{i}{{{\bf {q}}}_{m}^{j}}^{T}]~~{\text{for Ritz preconditioning}}}](https://www.myroms.org/myroms.org/v1/media/math/render/svg/8e219b384b9a579573b02aaa612f0305c15a268b)

![{{\bf {u}}}_{i},~~i=[2,10]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/1f172f81f90fc53d084a6f784e195f376b9f61ec)

![k=[2,5]](https://www.myroms.org/myroms.org/v1/media/math/render/svg/a55a6734a5d65e1f995754c9300f2d3e2c2cad7c)